
🦠 From Biomolecules to Bytes

Bioinformatics and machine learning are unveiling the mysteries of biology on an unprecedented scale. They are catalysts propelling us toward a future unlike anything we could have imagined just a decade ago: one with truly personalized medicine, where formerly extinct species walk the earth, where you can order a burger grown in a lab, and where the only limit on what cells can manufacture is our imagination. Some even assert that, in essence, all biology is computational biology [1]. Yet amid the buzz and enthusiasm surrounding these fields, it is all too easy to overlook the driving force behind this scientific revolution: data.

Data is not merely the passive fuel for bioinformatics and machine learning; it is the lifeblood of model development and often the key differentiator between projects. In this article, we'll trace the origins of the data behind countless bioinformatics projects and unravel how biological signals are converted into digitized outputs: the transformation of biomolecules into bytes. To demonstrate this concept, we'll use the example of next-generation sequencing, which transforms DNA samples into digital outputs. However, the same principles apply across various technologies, from microarray analysis to RNA-seq and beyond.

🦠 What is Next-Generation Sequencing (NGS)?

Next-generation sequencing (NGS), also known as high-throughput sequencing, is a powerful and efficient technology that enables the rapid sequencing of DNA or RNA. Unlike traditional Sanger sequencing, NGS allows for the parallel sequencing of millions of DNA fragments, producing massive amounts of data in a relatively short time.

The most common brands of next-generation sequencing instruments include Illumina, Oxford Nanopore Technologies, and Pacific Biosciences (PacBio). Illumina, in particular, has been a pioneering force in the NGS landscape, driving innovation and setting industry standards.

If you’re interested in learning how Illumina captured the NGS market like no other company before it, it’s worth reading the deep dive on this topic titled Illumina: The Measurement Monopoly.

These technologies have enabled a whole new field of biotech companies, ranging from startups aiming to revolutionize personalized medicine to established players pushing the boundaries of genomics. For example, Nebula Genomics utilizes Illumina sequencers to decode the human genome, allowing them to identify genetic variations and provide insights into personalized health. Another notable player, Cajal Neuroscience, utilizes a unique combination of next-generation sequencing, imaging technologies, and multi-omics techniques to identify what is happening in a degenerating brain, where it happens, and when.

🦠 How Does Next-Generation Sequencing Work?

In next-generation sequencing, we take DNA or RNA samples obtained through sample preparation techniques, such as DNA extraction or RNA isolation, and digitize them through a series of steps that convert biomolecules into digital representations. The end result is a set of FASTQ [2] files containing nucleotide sequences and their corresponding quality scores. In this section, I’ll give an overview of the end-to-end next-generation sequencing process, from sample extraction to data analysis.

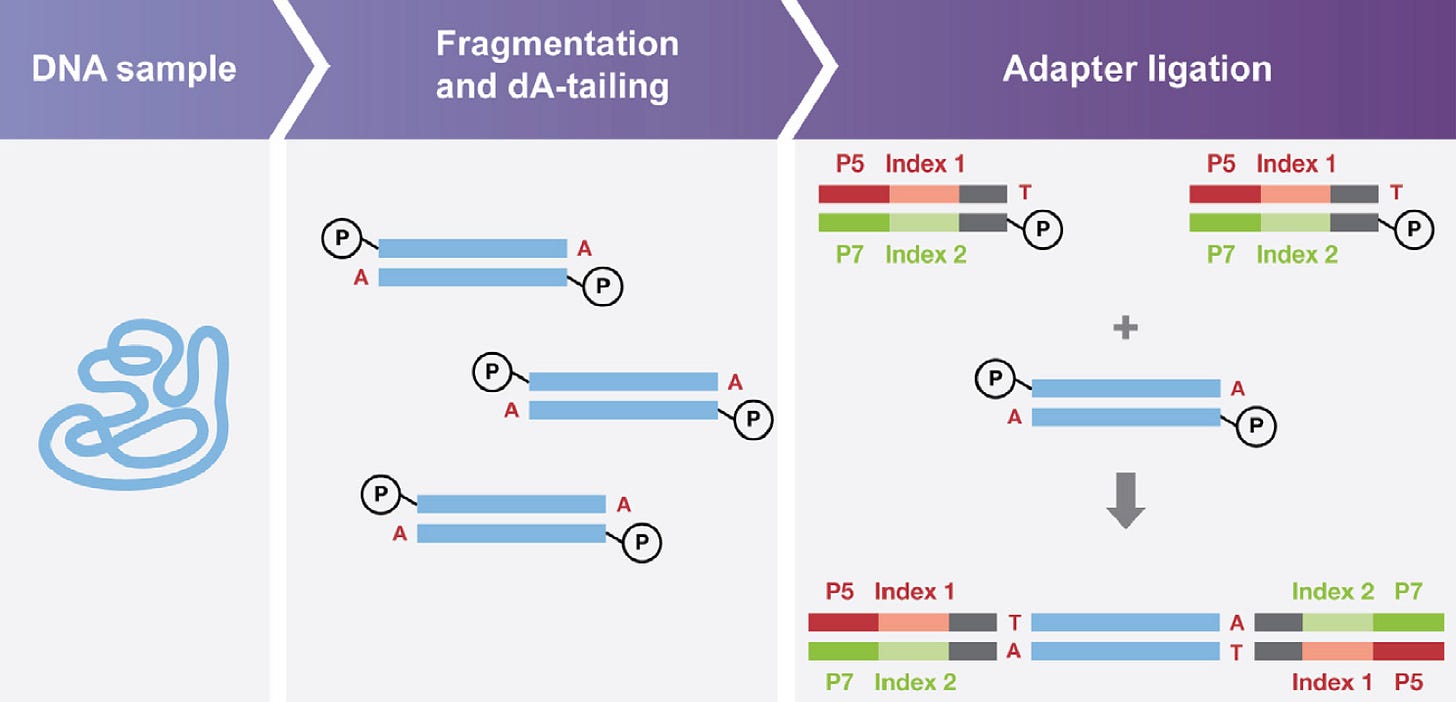

Before performing NGS analysis, DNA or RNA is extracted from biological samples, including tissues, blood, or cells. The specific extraction method varies, but it generally involves breaking open cells to release the genetic material and isolating it from other cellular components. After extraction, the DNA or RNA is often too long for many experimental purposes. As a result, it must be fragmented, which involves breaking the long strands of genetic material into smaller, more manageable pieces through physical or enzymatic methods. Lastly, adapters are attached to the ends of the fragmented DNA or RNA to create a sequencing library. Adapters are short, synthetic DNA sequences that serve as attachment points for subsequent steps in the sequencing process. They allow the sequencer to recognize and bind to the DNA fragments, facilitating the sequencing reaction, as the image below demonstrates.
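To make the fragmentation and adapter steps concrete, here is a minimal in-silico sketch. It is only an analogy for the wet-lab process, not how any kit or instrument works: the adapter sequences, fragment lengths, and function names (`fragment`, `build_library`) are all made up for illustration.

```python
import random

# Hypothetical adapter sequences, chosen only for illustration.
ADAPTER_5 = "ACACGACG"
ADAPTER_3 = "AGATCGGA"


def fragment(sequence, min_len, max_len, rng):
    """Cut a sequence into consecutive pieces of random length.

    The final piece may be shorter than min_len if the sequence runs out.
    """
    pieces = []
    pos = 0
    while pos < len(sequence):
        size = rng.randint(min_len, max_len)
        pieces.append(sequence[pos:pos + size])
        pos += size
    return pieces


def build_library(sequence, min_len=20, max_len=40, seed=0):
    """Fragment a sequence and attach an adapter to both ends of each piece."""
    rng = random.Random(seed)
    return [
        ADAPTER_5 + piece + ADAPTER_3
        for piece in fragment(sequence, min_len, max_len, rng)
    ]


# A random 200-base stand-in for extracted DNA.
genome_rng = random.Random(42)
genome = "".join(genome_rng.choice("ACGT") for _ in range(200))

library = build_library(genome)
for molecule in library[:3]:
    print(molecule)
```

Every molecule in `library` now starts and ends with a known sequence, which is the property the sequencer relies on to bind fragments regardless of what lies between the adapters.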

Next, the library prepared in the previous step is loaded onto a flow cell, which is then positioned on the sequencer over a photodiode (the relevance of this will become clear soon). Here, each bound fragment is amplified into a cluster of identical copies through a process known as cluster generation, yielding millions of clusters of identical single-stranded DNA fragments. The sequencing by synthesis (SBS) process follows, in which chemically modified nucleotides bind to the DNA template strand through natural base-pair complementarity. These modified nucleotides are labeled with fluorescent dyes, with a different color dye for each of the four nucleotides (A, T, G, C).

As the fluorescently labeled nucleotides are added, a fluorescent signal corresponding to the incorporated base is emitted and recorded with a photodiode, allowing the sequencer to identify and record the specific nucleotide incorporated into the growing DNA strand (remember, each fluorescent label will emit a different color light based on the nucleotide it's bound to, which is recorded by the photodiode). The recorded signals are converted into digital data, forming the raw data generated during the sequencing run.
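The idea of turning recorded light into letters can be sketched in a few lines. This is a toy model under simplifying assumptions: one intensity channel per base, made-up intensity values, and a hypothetical `call_bases` function that simply picks the brightest channel at each cycle. Production base callers are considerably more involved.

```python
# Assumed channel order: one fluorescence channel per base.
CHANNELS = "ACGT"


def call_bases(intensities):
    """For each sequencing cycle, pick the base whose channel is brightest."""
    read = []
    for cycle in intensities:
        brightest = max(range(len(CHANNELS)), key=lambda i: cycle[i])
        read.append(CHANNELS[brightest])
    return "".join(read)


# Made-up intensities for one cluster over four cycles (A, C, G, T channels).
signal = [
    [0.91, 0.03, 0.04, 0.02],
    [0.05, 0.02, 0.88, 0.05],
    [0.02, 0.07, 0.03, 0.88],
    [0.10, 0.80, 0.06, 0.04],
]

print(call_bases(signal))  # AGTC
```

Each row of `signal` is one cycle of the sequencing run, so the order of the rows is what gives the order of the bases in the read.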

An aside: This concept is similar to how photodiodes are used in near-infrared spectroscopy (NIRS), a technique that exploits the interaction of near-infrared light with molecules to analyze their composition. In the context of measuring hemoglobin, NIRS utilizes the fact that hemoglobin absorbs and reflects light differently when it is oxygenated and deoxygenated. By shining near-infrared light through the skin and into a muscle, we can infer both the concentration of hemoglobin present and the percentage of it that is oxygenated. My team and I at NNOXX have exploited this phenomenon to measure muscle oxygenation (SmO2) deep inside exercising muscles.

After this initial signal acquisition process, the raw signals are converted into nucleotide 'base calls' by specialized software, which determines the nucleotide composition of a DNA strand from the colors of light detected during signal acquisition and the order in which they were detected. Following base calling, each detected nucleotide is assigned a quality score indicating how confident the software is in that call (higher scores correspond to higher confidence). Finally, a digitized file is written in a standard format, such as FASTQ, including the sequence information, quality scores, and metadata for each read. This data can then be used for various applications in genomics, transcriptomics, epigenomics, and more. For example, you may want to align the newly acquired sequencing data to a known reference genome to identify regions of similarity and genetic variations relative to that reference, which was the topic of my last article titled A DIY Guide Genomic Variant Analysis.
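To see what this output looks like in practice, here is a small sketch that reads a FASTQ record and decodes its quality string. It assumes the common four-lines-per-record layout and the Phred+33 encoding, where each quality character's ASCII code minus 33 gives a score Q, and the estimated error probability is 10^(-Q/10). The function names and the example read are invented for illustration; for real work, an established parser is a safer choice than this hand-rolled one.

```python
import io


def read_fastq(handle):
    """Yield (read_id, sequence, quality_string) from 4-line FASTQ records."""
    while True:
        header = handle.readline().rstrip("\n")
        if not header:
            return
        sequence = handle.readline().rstrip("\n")
        handle.readline()  # the '+' separator line
        quality = handle.readline().rstrip("\n")
        yield header[1:], sequence, quality


def phred_scores(quality, offset=33):
    """Decode a quality string into integer Phred scores (Phred+33 assumed)."""
    return [ord(ch) - offset for ch in quality]


def error_probability(q):
    """Convert a Phred score into the estimated probability of a wrong call."""
    return 10 ** (-q / 10)


# A made-up single-read FASTQ file held in memory.
example = io.StringIO("@read_1\nGATTACA\n+\nIIIIII#\n")

for read_id, seq, qual in read_fastq(example):
    scores = phred_scores(qual)
    for base, q in zip(seq, scores):
        print(read_id, base, q, error_probability(q))
```

In this example the first six bases have a score of 40 (roughly a 1 in 10,000 chance of being wrong), while the last base has a score of 2 and would typically be trimmed or filtered before downstream analysis.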


[1] Markowetz F. All biology is computational biology. PLoS Biol. 2017 Mar 9;15(3):e2002050. doi: 10.1371/journal.pbio.2002050. PMID: 28278152; PMCID: PMC5344307.

[2] There are three primary data formats you’ll encounter in bioinformatics: reference data formats, results formats, and experimentally obtained data formats. Reference data formats capture prior knowledge and include FASTA and GFF files, for example. Results formats hold data generated by a specific analysis and include file types such as VCF and BAM. The third type (experimentally obtained) is the most heterogeneous and includes the formats produced by processes such as NGS, for example FASTQ.