Machine Learning Pipelines For Workflow Automation

Codifying Best Practices For Automating Machine Learning Workflows

My most recent handful of Decoding Biology newsletters have been about machine learning model development. Model development is about creative problem-solving and discovering what does or does not work - this includes data collection and processing, algorithm spot-checking, training a machine learning model, evaluating a model, and tuning it to improve its performance.

Generally, model building and refinement is the most labor-intensive step toward deploying a functional machine learning model. On the other hand, creating a pipeline is all about codifying your process to automate the workflow mentioned above.

The image below visually represents the varying steps between identifying what type of problem you’re working with and deploying a functioning machine learning model that makes accurate predictions on unseen data:

As previously stated, the most recent handful of Decoding Biology newsletters have been about developing, tuning, and evaluating machine learning models. This newsletter, however, is about what to do after you’ve created a model and have it working well enough to deploy, which is where pipelines come in.

Pipelines are about codifying experimentally-derived best practices and automating the workflow to produce your machine learning model. Machine learning pipelines consist of multiple sequential steps that do everything from data extraction and preprocessing to model training and deployment.

The following coding example demonstrates how to create a pipeline that makes accurate predictions about new unseen data. For this demonstration, let’s assume the following:

Linear discriminate analysis (LDA) is the best-performing algorithm for our problem;

k-fold cross-validation and logistic loss are the most helpful evaluation method and metric, respectively; and

Principal component analysis (PCA) and univariate selection (SelectKBest) are the most useful feature selection methods for selecting features in the dataset that best contribute to predicting the desired outcome variable in our model.

Which produced the following output:

Negative Logistic loss represents the confidence for a given algorithm's predictive capabilities and is scored from 0 to -1. Additionally, correct and incorrect predictions are rewarded in proportion to the confidence of the prediction. The closer the negative logloss score is to -1, the more the predicted probability diverges from the actual value. Alternatively, a logloss value of 0 indicates perfect predictions.

The negative logloss value above corresponds to a classification accuracy of roughly 78% (in this specific instance), meaning that ~8/10 predictions are made correctly.

Assuming we’re happy with this models performance we can use it to make predictions on new data. Since we’re using the Pima Diabetes dataset, we’ll enter in values for the following features in order to predict whether an individual has diabetes (input values in brackets):

Number of times pregnant [2]

Plasma glucose concentration two hours into an oral glucose tolerance test [155 ng/dl]

Diastolic blood pressure [95 mmHg]

Triceps skin fold thickness [15mm]

2-hour serum insulin concentration [0 mu U/ml]

Body mass index [32.9]

Diabetes pedigree function [0.55]

Age [40]

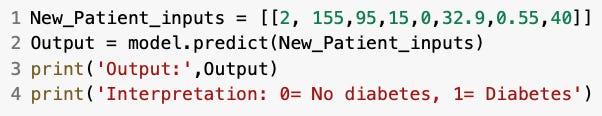

The above values are stored in the New_Patient_inputs label and an output is predicted, as demonstrated in the code below:

Which results in the following output:

In theory, we could use this model to intake a patient's data, as outlined above, and predict whether or not the individual has diabetes. However, in practice, we would want to train our model on a much broader dataset with individuals of various ages, sexes, socioeconomic statuses, ethnicities, and so forth to ensure we're getting a realistic sampling of the population we wish to deploy the model with. Additionally, we would want to tune our model to increase its performance to avoid type 1 and type 2 errors, which could be highly detrimental when the model is used to make medical and/or lifestyle decisions.

👉 If you have any questions about the content in this newsletter please let me know in the comment section below.

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack. The button is located towards the bottom of this email.

👉 If you enjoy reading this newsletter, please share it with friends!